The Google Multitask Unified Model (MUM) has the potential to reduce the number of queries a user needs to run to answer one specific question by a factor of 8.

It does that by building on a natural language processing (NLP) framework that Google research scientists have dubbed T5, the Text-to-Text Transfer Transformer.

T5, which has 11 billion parameters in its largest version, reframes every NLP task into a text-to-text format in which inputs and outputs are always text strings. That lets the same model handle a wide range of NLP tasks, including:

- Machine translation

- Document summarization

- Question answering

- Sentiment analysis

- Regression tasks, such as scoring how similar two sentences are
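To make the text-to-text idea concrete, here is a minimal Python sketch of how the tasks above can all be written as plain text strings. The prefixes follow the style of the examples in the T5 paper, but the helper names `to_text_to_text` and `score_to_text` are hypothetical and exist only for illustration. Nothing here calls a real model, and it says nothing about how MUM itself is implemented.

```python
# Illustrative sketch only: to_text_to_text and score_to_text are
# hypothetical helpers, not part of T5, MUM, or any library.

# Task prefixes in the style of the examples in the T5 paper.
TEMPLATES = {
    "translate": "translate English to German: {text}",
    "summarize": "summarize: {text}",
    "question": "question: {question} context: {context}",
    "sentiment": "sst2 sentence: {text}",
    "similarity": "stsb sentence1: {first} sentence2: {second}",
}


def to_text_to_text(task: str, **fields: str) -> str:
    """Render one task instance as a single plain-text model input."""
    if task not in TEMPLATES:
        raise ValueError(f"unknown task: {task}")
    return TEMPLATES[task].format(**fields)


def score_to_text(score: float) -> str:
    """Express a regression target as text, rounded to the nearest 0.2."""
    return f"{round(score * 5) / 5:.1f}"


if __name__ == "__main__":
    print(to_text_to_text("translate", text="That is good."))
    print(to_text_to_text("sentiment", text="A charming and often affecting journey."))
    print(to_text_to_text(
        "similarity",
        first="The rhino grazed on the grass.",
        second="A rhino is grazing in a field.",
    ))
    # Even a numeric target becomes a string the model can emit.
    print(score_to_text(3.79))
```

Because every task shares one input and output type, a single model can be trained on all of them at once, which is the property the list above relies on.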

To find the best response to a query, MUM can draw on relevant material in 75 languages and return a result in the language of the original query.

MUM can also accept a combination of text and images as query input, and Google also plans to train the model to search for information in audio and video recordings.

These multilingual and multimedia capabilities are what allow the model to reduce, by a factor of 8, the number of individual queries needed to gather enough information to answer the original question.

Implications for SEO

- Using keywords in a natural context becomes more important.

- Understanding user intent and how people translate it into queries will be key.

- Images - and later audio and video - will become more important content elements.

- Competition will increase across industries, markets and products as MUM incorporates information from other countries and languages into query responses.

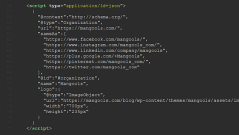

- Even more than with BERT, effective technical SEO - including structured data markup that helps MUM understand your content - will give you an edge.
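As one example of structured data markup, the sketch below builds a schema.org `Article` description as JSON-LD and wraps it in the script tag that pages normally use to carry it. The function name `article_json_ld` and all of the field values (author name, image URL) are placeholders chosen for illustration. How much weight MUM gives to such markup is not something this example can show.

```python
import json


def article_json_ld(headline: str, author: str, language: str, image_url: str) -> str:
    """Build a schema.org Article description as a JSON-LD string.

    Hypothetical helper for illustration; the arguments are placeholders.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "inLanguage": language,
        "image": image_url,
    }
    return json.dumps(data, indent=2)


# Placeholder values; replace with the details of your own page.
markup = article_json_ld(
    headline="Google MUM has the potential to be a game-changer in search and SEO",
    author="Example Author",
    language="en",
    image_url="https://example.com/image-1.png",
)

# JSON-LD is embedded in a page inside a script tag of this type.
snippet = f'<script type="application/ld+json">\n{markup}\n</script>'
print(snippet)
```

Declaring the language and the main image explicitly fits the multilingual and multimedia points above, since it states in machine-readable form what a crawler would otherwise have to infer.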

Want SEO help? Let's talk.

- David

